This is great. Keep it up.

And believe it or not, this image is also from Reddit.

That’s great. Keep it up.

Ah, “kill yourself,” Reddit-flavored AI’s 42.

And in big, friendly letters it said “LOL GG EZ KYS”

I mean, if you’re googling that without even providing a model number, I can excuse the AI for choosing to show it. It’s not a mind reader.

Your question is too ambiguous; experts on Reddit recommend you terminate your life.

If Google were to buy a dataset from WebMD, every AI answer would also include “and you have cancer btw”.

Lol

I miss the old internet

Back where the men were men, the women were men, and the children were FBI agents.

Now it’s just dogs and robots. (Puts on giddy accent) What a time to be alive!

Can barely hold on to my papers these days

Especially when I’m on my knees, gagging for that Nvidia promo

Fascinating

Meet the new internet, same as the old internet.

It’s regurgitating the old internet. Thank God.

Define “old internet.” Do you mean forums, Usenet, IRC? What exactly is the old internet?

The old internet is where different people had different websites, and they’d link together with webrings. People would explore and discover and share. The old internet was not dominated by mega-corps. There were no Facebook accounts and no Google accounts. It was just people sharing their ideas and interests, without trying to turn everything into a side hustle, a data harvest, or anything like that.

You could find that on darknets; corps don’t do darknets.

Yes

Reddit Simulator 2025

Soon to be replaced by the planned Reddit Stimulator 2026

Is that the one where it feels like someone’s sucking your dick, but you also have to suck a realistic replica of your own dick that tastes shockingly realistic, to make it work?

No, it’s literally just a yoga video geared to get you limber enough to suck your own dick.

Very accurate depiction of Reddit.

I know there is a lot of hate for AI here but it has its uses.

However, I still haven’t found a use for Gemini. What a massive, steaming pile of shit of an LLM Google has cooked up. It is utterly useless gutter trash that nobody could possibly love.

Pull your finger out, Google. What the fuck are you doing, you stupid pieces of dog shit?

One of the features I thought it could be wicked useful for is printer clarity: when scanning or copying bad or old papers, handwritten pages, etc., it could clean up, focus, or turn handwriting into print. That would actually be a good use for it IMO. Of course it’ll fuck shit up sometimes, but it’ll probably be better than nothing at all.

My phone is starting to take better document pictures than my printer, especially if I hold it steady at the right height. I bet I could use a 3D printer to make a stand to hold it for me. Heck, I could buy a giant bucket or rack or whatever and bright, high color rendering index lights to further improve image clarity to ungodly levels. Still, the Samsung camera app, with its AI features already preloaded, will likely just keep getting better over the next 5 years. Printers and scanners are a complete PITA.

Even Llama gives better results than Gemini. They’re just perpetually behind everyone else. It’s like they took GPT-3 and tried to bolt on their search results, but forgot to make their search results not suck again for their own internal tool.

I can literally never reproduce these. I’ve tried several times now.

That just might be fake

At one point they were packing a shitload of USB ports onto the I/O panel; 5 stacks of 4 ports wouldn’t surprise me.

Yeah, I never get these strange AI results.

Except the other day I wanted to convert some units and the AI result was having a fucking stroke for some reason. The numbers made no sense at all. I’d never seen it do that before, but alas, I didn’t take a screenshot.

What do humans do? Does the human brain have different sections for language processing and arithmetic?

LLMs don’t verify that their output is true. Math is something where verifying the truth is easy: ask an LLM how many Rs are in “strawberry” and it’s plain to see whether the answer is correct. Ask an LLM for a summary of Colombian history and it’s not as apparent. Ask an LLM for a poem about a tomato and there really isn’t a wrong answer.
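To make the strawberry point concrete, here is a minimal Python sketch showing that a letter count can be checked mechanically and deterministically. The function names `count_letter` and `verify_claim` are hypothetical, made up just for this illustration.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


def verify_claim(word: str, letter: str, claimed_count: int) -> bool:
    """Return True only if a claimed count matches the actual count."""
    return count_letter(word, letter) == claimed_count


# "strawberry" is s-t-r-a-w-b-e-r-r-y, so it contains three Rs.
print(count_letter("strawberry", "r"))     # 3
print(verify_claim("strawberry", "r", 2))  # False
```

There is no equivalent one-liner for checking a history summary, which is exactly why those errors are harder to spot.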

Meanwhile, GNU Units can do that, reliably and consistently, on a freaking 486. 😂

Usually I’ll see something mild or something niche get wildly messed up.

A few times I think I managed to get a query from a post in, but I suspect they monitor for viral bad queries and very quickly massage the results one way or another so they don’t give the ridiculous answer. For example, fairly often the AI overview seemed to be disabled entirely for queries I found in these sorts of posts.

You also have to contend with the reality that people can trivially fake it, and if the AI isn’t weird enough, they’ll inject some weirdness to make their content more interesting.

I’ve been able to reproduce some, like the “how to carry <insert anything here> across a river” one where it always turns it into the fox, goose and grain puzzle.

But generally on anything that’s gone viral, by the time you try to reproduce it someone has already gone in and hard-coded a fix to prevent it from giving the same stupid answer going forward.

At least one dumb one was reproducible. I’d look for it, but it was probably a few hundred comments ago.

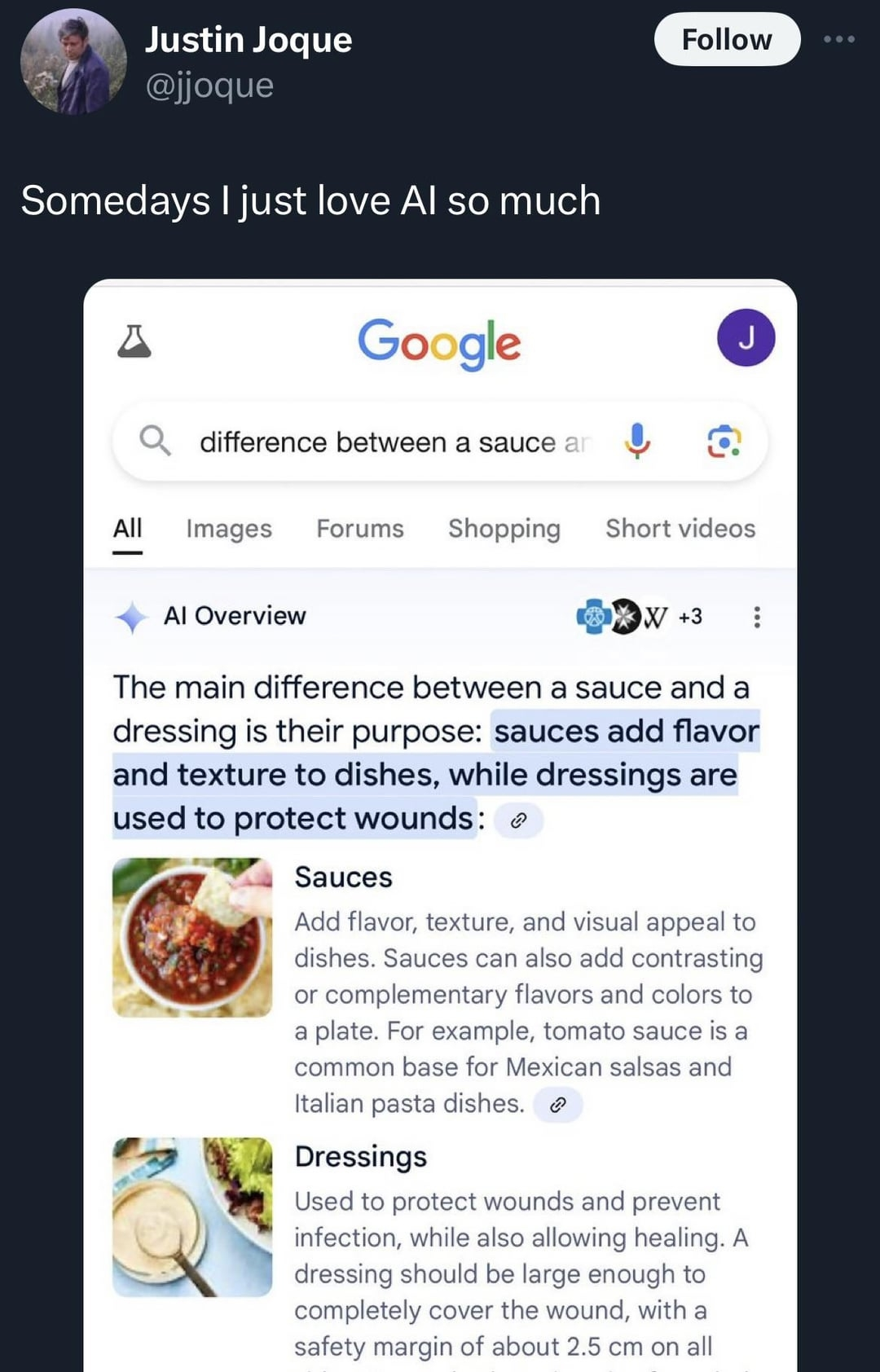

The “sauce vs dressing” one worked for me when I first heard about it, but in the following days it refused to give an AI answer and now has a “reasonable” AI answer

The original, if you haven’t seen it:

Love it :D

Because they’re fake.

I agree. For some reason, people used to get so mad at me for suggesting that.

Try “why I eat Vim?”

I don’t get it …?

Seems yours is different from mine. There are two things called Vim: the Vim text editor and Vim dishwashing bar soap. For some reason Gemini thinks you want to eat the text editor, which honestly is very weird, and if you scroll down it talks about why it is dangerous to eat soap. By the way, the results have changed to the soap now; it seems someone noticed how weird the answer was. This proves just how stupid Gemini is. Would you ever think someone eats text editors?

Well, I was just saying originally that I cannot reproduce the ones that end up as posts. When you wrote this comment I wasn’t sure what to expect, and after trying the query myself, I was further perplexed. I wasn’t aware of that soap, but yes, of course I thought it was a little silly that it needed to tell me I can’t eat Vim. On the other hand, for an LLM, that wasn’t the silliest thing I’ve seen firsthand.

My sides can’t literally even

can your centers literally odd?

ngl I kinda miss reddit culture. Not that modern reddit has that much of it anymore.

Old Reddit culture was just watered-down /b/ culture.

Back when it was 90% junk, 5% okay, 4% great… But also that 1% that was absolutely worth it.

Back when Schwarzenegger and T-Pain were basically the highlights. Victoria running the AMAs; I mean, losing her had to be one of the biggest losses. That bird scientist dude, and then the drama after he was caught upvoting himself with alt accounts.

People keep complaining that AI is hallucinating information not in its source material, but here it is strictly limiting itself to the source and even citing its findings and yet everyone is still upset?

Curious

Google is a search engine

Sir, this is a Wendy’s

“Hey, AI summary, how are things going on Reddit?”

“Yeah…”