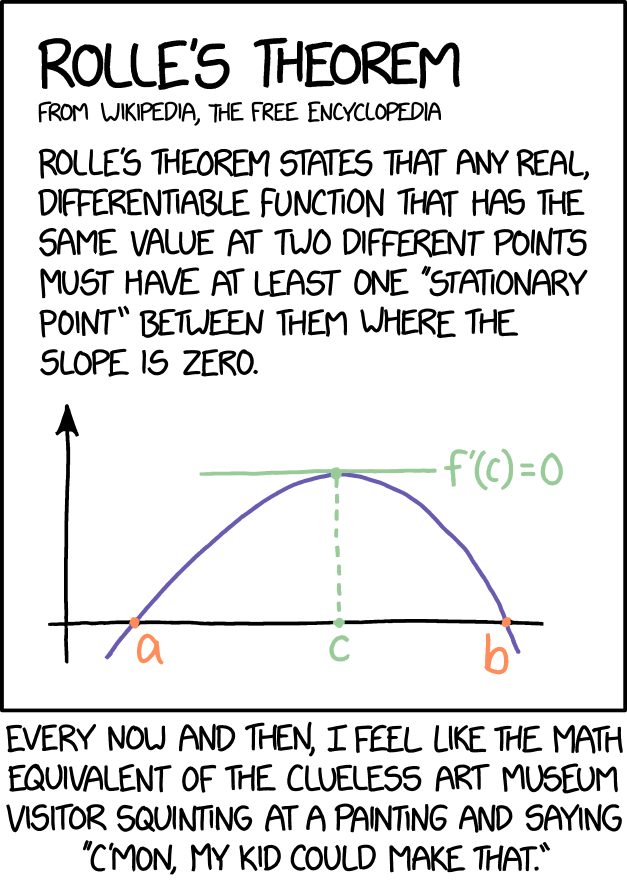

This guy would not be happy to learn about the 1+1=2 proof

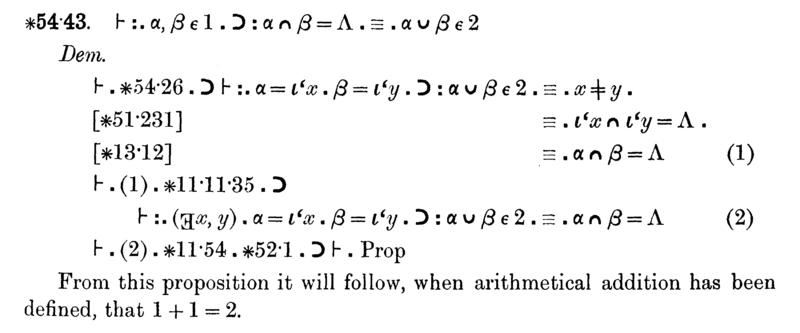

One part of the 360 page proof in Principia Mathematica:

It’s not a 360 page proof, it just appears that many pages into the book. That’s the whole proof.

Weak-ass proof. You could fit this into a margin.

Upvoting because I trust you it’s funny, not because I understand.

It’s a reference to Fermat’s Last Theorem.

Tl;dr is that a legendary mathematician wrote in a margin of a book that he’s got a proof of a particular proposition, but that the proof is too long to fit into said margin. That was around the year 1637. A proof was finally found in 1994.

I thought it must be something like that, I expected it to be more specific though :)

Late to the party, but that’s actually one of those stories in mathematics that is heartwarming and ridiculous at the same time.

Principia Mathematica should not be used as a source book for any actual mathematics because it’s an outdated and flawed attempt at formalising mathematics.

Axiomatic set theory provides a better framework for elementary problems such as proving 1+1=2.

I’m not believing it until I see your definition of arithmetical addition.

Friggin nerds!

A friend of mine took Introduction to Real Analysis in university and told me their first project was “prove the real number system.”

Real analysis when fake analysis enters

I don’t know about fake analysis but I imagine it gets quite complex

Isn’t “1+1” the definition of 2?

That assumes that 1 and 1 are the same thing. That they’re units which can be added/aggregated. And when they are that they always equal a singular value. And that value is 2.

It’s obvious, but the proof isn’t about stating the obvious. It’s about making clear what the concrete rules are in the symbolism/language of math, I believe.

This is what happens when the mathematicians spend too much time thinking without any practical applications. Madness!

The idea that something not practical is also not important is very sad to me. I think the least practical thing that humans do is by far the most important: trying to figure out what the fuck all this really means. We do it through art, religion, science, and… you guessed it, pure math. and I should include philosophy, I guess.

I sure wouldn’t want to live in a world without those! Except maybe religion.

We all know that math is just a weirdly specific branch of philosophy.

Physics is just a weirdly specific branch of math

Only recently

Just like they did with that stupid calculus that… checks notes… made possible all of the complex electronics used in technology today. Not having any practical applications currently does not mean it never will

I’d love to see the practical applications of someone taking 360 pages to justify that 1+1=2

The practical application isn’t the proof that 1+1=2. That’s just a side-effect. The application was building a framework for proving mathematical statements. At the time the Principia was written, maths wasn’t nearly as grounded in demonstrable facts and reason as it is today. Even though the Principia failed (for reasons to be developed some 20 years later), the idea that every proposition should be proven from as few and as simple axioms as possible prevailed.

Now if you’re asking: Why should we prove math? Then the answer is: All of physics.

The answer to the last question is even simpler and broader than that. Math should be proven because all of science should be proven. That is what separates modern science from delusion and self-deception


It depends on what you mean by well defined. At a fundamental level, we need to agree on basic definitions in order to communicate. Principia Mathematica aimed to set a formal logical foundation for all of mathematics, so it needed to be as rigid and unambiguous as possible. The proof that 1+1=2 is just slightly more verbose when using their language.

Using the Peano axioms, which are often used as the basis for arithmetic, you first define a successor function, often denoted as •’ and the number 0. The natural numbers (including 0) then are defined by repeated application of the successor function (of course, you also first need to define what equality is):

0 = 0

1 := 0’

2 := 1’ = 0’’, etc.

Addition, denoted by •+• , is then recursively defined via

a + 0 = a

a + b’ = (a+b)’

which quickly gives you that 1+1=2: 1+1 = 1+0’ = (1+0)’ = 1’ = 2. But that requires you to take these axioms for granted. Mathematicians proved it with fewer assumptions, but the proof got a tad verbose.
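For anyone who wants to poke at the successor-based definition, here is a minimal Python sketch. It is only an illustration: the names `Nat`, `succ`, `add`, `ZERO`, `ONE` and `TWO` are made up for this example, and it leans on Python’s built-in equality rather than defining equality from scratch, which a real formalisation would have to do.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Nat:
    """A natural number: zero (pred is None) or the successor of pred."""
    pred: Optional["Nat"] = None


ZERO = Nat()


def succ(n: Nat) -> Nat:
    """The successor function, written n' in the comment above."""
    return Nat(n)


def add(a: Nat, b: Nat) -> Nat:
    # Rule 1: a + 0 = a
    if b.pred is None:
        return a
    # Rule 2: a + b' = (a + b)'
    return succ(add(a, b.pred))


ONE = succ(ZERO)  # 1 := 0'
TWO = succ(ONE)   # 2 := 1' = 0''

print(add(ONE, ONE) == TWO)  # True
```

The call `add(ONE, ONE)` applies the second rule once and the first rule once, which is the whole “proof” once these axioms are taken for granted.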

The “=” symbol defines an equivalence relation. So “1+1=2” is one definition of “2”, defining it as equivalent to the addition of 2 identical unit values.

2*1 also defines 2. As does any even quantity divided by half its value. 2 is also the successor to 1 (and predecessor to 3), if you base your system on counting (or anti-counting).

The YouTuber Vi Hart has a video that whimsically explores the idea that numbers and operations can be looked at in different ways.

I’ll always upvote a ViHart video.

Or the pigeonhole principle.

That’s a bit of a misnomer, it’s a derivation of the entirety of the core arithmetical operations from axioms. They use 1+1=2 as an example to demonstrate it.

A lot of things seem obvious until someone questions your assumptions. Are these closed forms on the Euclidean plane? Are we using Cartesian coordinates? Can I use the 3rd dimension? Can I use 27 dimensions? Can I (ab)use infinities? Is the embedded space well defined, and can I poke a hole in the embedded space?

What if the parts don’t self-intersect, but they’re so close that when printed as physical parts the materials fuse so that for practical purposes they do intersect because this isn’t just an abstract problem but one with real-world tolerances and consequences?

Yes, the paradox of Gabriel’s Horn presumes that a volume of paint translates to an area of paint (and that paint when used is infinitely flat). Often mathematics and physics make strange bedfellows.

until someone questions your assumptions

Oh, come on. This is math. This is the one place in the universe where all of our assumptions are declared at the outset and questioning them makes about as much sense as questioning “would this science experiment still work in a universe where gravity went the wrong way”. Please just let us have this?

If that were true almost every non trivial proof would be way way wayyyy too long.

It’s all jokes and fun until you meet Riemann series theorem

Tetris’s Theorem: The sum of the series of every Riemann Zero is equal to a number not greater than or less than zero.

Yeah, this is one of those theorems, but history is studded with “the proof is obvious” lemmas that have taken down entire sets of theorems (and entire PhD theses).

Yeah, the four color problem becomes obvious to the brain if you try to place five territories on a plane (or a sphere) that are all adjacent to each other. (To require four colors, one of the territories has to be surrounded by the others)

But this does not make for a mathematical proof. We have quite a few instances where this is frustratingly the case.

Then again, I thought 1+1=2 is axiomatic (2 being defined by having a count of one and then another one). So I don’t understand why Bertrand Russell had to spend 86 pages proving it from baser fundamentals.


Well, he was trying to derive essentially all of contemporary mathematics from an extremely minimal set of axioms and formalisms. The purpose wasn’t really to just prove 1+1=2; that was just something that happened along the way. The goal was to create a consistent foundation for mathematics from which every true statement could be proven.

Of course, then Kurt Gödel came along and threw all of Russell’s work in the trash.

Saying it was all thrown in the trash feels a bit glib to me. It was a colossal and important endeavour – all Gödel proved was that it wouldn’t help solve the problem it was designed to solve. As an exemplar of the theoretical power one can form from a limited set of axiomatic constructions and the methodologies one would use it was phenomenal. In many ways I admire the philosophical hardball played by constructivists, and I would never count Russell amongst their number, but the work did preemptively field what would otherwise have been a series of complaints that would’ve been a massive pain in the arse.

Someone had to take him down a peg

Smarmy git, strolling around a finite space with an air of pure arrogant certainty.

Yeah, the four color problem becomes obvious to the brain if you try to place five territories on a plane (or a sphere) that are all adjacent to each other.

I think one of the earliest attempts at the 4 color problem proved exactly that (that the K5 graph cannot be planar). Search engines are failing me in finding the source on this though.

But anyway, that result is not sufficient to prove the 4-color theorem. A graph doesn’t need to have a K5 subgraph to make it impossible to 4-color. Think of two K4 graphs. Choose one vertex from each, call them A and B. Connect A and B together. Now make a new vertex called C and connect C to every vertex except A and B. The result should be a K5-free graph that cannot be 4-colored.
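Reading the construction above with complete graphs (two K4 blocks, with K5 as the subgraph to avoid), the claim is small enough to check by brute force. The sketch below is just that, a check under that reading; the vertex labels `a1`…`b3` and the helper names are invented for the example.

```python
from itertools import combinations

# Two copies of K4 (one containing A, one containing B) plus an extra vertex C.
left = ["A", "a1", "a2", "a3"]
right = ["B", "b1", "b2", "b3"]
vertices = left + right + ["C"]

edges = set()


def connect(u, v):
    edges.add(frozenset((u, v)))


for block in (left, right):
    for u, v in combinations(block, 2):
        connect(u, v)          # every pair inside a K4 is adjacent
connect("A", "B")              # join the two chosen vertices
for v in vertices:
    if v not in ("A", "B", "C"):
        connect("C", v)        # C touches everything except A and B


def adjacent(u, v):
    return frozenset((u, v)) in edges


def has_clique(k):
    """True if some k vertices are pairwise adjacent."""
    return any(
        all(adjacent(u, v) for u, v in combinations(group, 2))
        for group in combinations(vertices, k)
    )


def colorable(k, coloring=None):
    """Backtracking search for a proper coloring with k colors."""
    coloring = {} if coloring is None else coloring
    if len(coloring) == len(vertices):
        return True
    v = vertices[len(coloring)]
    for color in range(k):
        if all(coloring[u] != color for u in coloring if adjacent(u, v)):
            coloring[v] = color
            if colorable(k, coloring):
                return True
            del coloring[v]
    return False


print(has_clique(5), colorable(4), colorable(5))  # False False True
```

So the graph has no five mutually adjacent vertices and still needs five colors. By the four color theorem it therefore cannot be planar, which is the point: ruling out five mutually adjacent regions does not by itself rule out every map that needs five colors.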

Then again, I thought 1+1=2 is axiomatic (2 being defined by having a count of one and then another one). So I don’t understand why Bertrand Russell had to spend 86 pages proving it from baser fundamentals.

It is mathematics. Of course it has to be proved.

You only needed to choose 2 points and prove that they can’t be connected by a continuous line. Half of your obviousness rant

prove it then.

It’s fucking obvious!

Seriously, I once had to prove that multiplying a value by a number between 0 and 1 decreased its original value, i.e. effectively defining the unary, which should be an axiom.

Mathematicians like to have as few axioms as possible because any axiom is essentially an assumption that can be wrong.

Also, proving elementary results like your example with as few tools as possible is a great exercise to learn mathematical deduction and to understand the relation between certain elementary mathematical properties.

So you need to prove x•c < x for 0<=c<1?

Isn’t that just:

xc < x | ÷x

c < x/x (for x=/=0)

c < 1 q.e.d.

What am I missing?

My math teacher would be angry because you started from the conclusion and derived the premise, rather than the other way around. Note also that you assumed that division is defined. That may not have been the case in the original problem.

Isn’t that how methods like proof by contrapositive work?

Proof by contrapositive would be c<0 ∨ c≥1 ⇒ … ⇒ xc≥x. That is not just starting from the conclusion and deriving the premise.

I really don’t care.

Your math teacher is weird. But you can just turn it around:

c < 1

c < x/x | •x

xc < x q.e.d.

This also shows that c≥0 is not actually a requirement, but x>0 is.
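A quick sanity check of that last remark, using exact fractions so rounding doesn’t muddy things. This is only a handful of spot checks, not a proof, and the helper name `shrinks` is made up for the example.

```python
from fractions import Fraction


def shrinks(x: Fraction, c: Fraction) -> bool:
    """Return True when x*c < x."""
    return x * c < x


print(shrinks(Fraction(2), Fraction(1, 2)))   # True: the expected case
print(shrinks(Fraction(2), Fraction(-3)))     # True: c < 0 is fine when x > 0
print(shrinks(Fraction(-2), Fraction(1, 2)))  # False: -1 is not less than -2
print(shrinks(Fraction(0), Fraction(1, 2)))   # False: 0 is not less than 0
```

The third and fourth lines show why x>0 is needed: multiplying an inequality by a negative number flips it, and multiplying by zero collapses both sides.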

I guess if your math teacher is completely insufferable, you need to add the definitions of the arithmetic operations but at that point you should also need to introduce Latin letters and Arabic numerals.

It can’t be an axiom if it can be defined by other axioms. An axiom can not be formally proven

One point on the line

Take 2 points on the normal, on opposite sides

Try to connect it

Wow you can’t

This isn’t a rigorous mathematical proof that would show it holds true in every case. You aren’t wrong, but this is a colloquial definition of proof, not a mathematical proof.

Sorry, I’ve spent too much of my earthly time on reading and writing formal proofs. I’m not gonna write it now, but I will insist that it’s easy

so… maybe it’s not worth proving then.

Oh trust me, I believe you. Especially using modern set theory and not the Principia Mathematica.

Only works for a smooth curve with a neighbourhood around it. I think you need the transverse regular theorem or something.

Grated

bich
