Summary

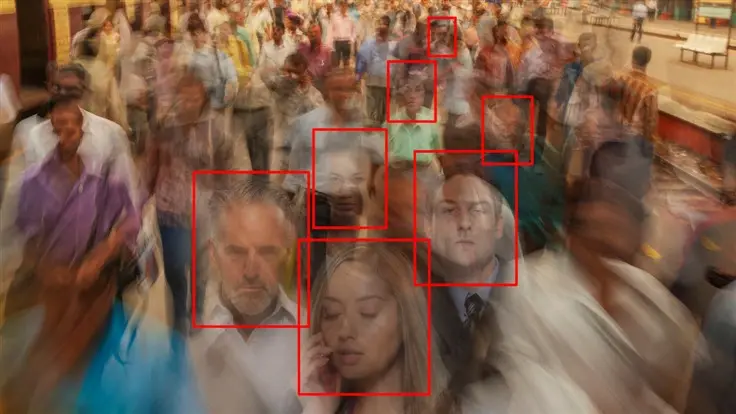

- Detroit woman wrongly arrested for carjacking and robbery due to facial recognition technology error.

- Porcha Woodruff, 8 months pregnant, mistakenly identified as the culprit based on an outdated 2015 mug shot.

- Surveillance footage did not match the identification; the victim wrongly picked Woodruff from a lineup that used the outdated 2015 photo.

- Woodruff arrested and detained for 11 hours; charges later dismissed; she has filed a lawsuit against Detroit.

- Facial recognition technology’s flaws in identifying women and people with dark skin highlighted.

- Several US cities banned facial recognition; debate continues due to lobbying and crime concerns.

- Law enforcement prioritized technology’s output over visual evidence, raising questions about its integration.

- ACLU Michigan involved; outcome of lawsuit uncertain, impact on law enforcement’s tech use in question.

Arresting people because AI said so? Let’s fucking go. Can’t wait for the AI model that just returns Yes for everything.

Maybe that’s how the Matrix happened

Coreutils has this AI built in: `yes`