With free ESXi over (not shocking, but sad), I am now about to move away from a virtualisation platform I’ve used for a quarter of a century.

Never having really tried the alternatives, is there anything that looks and feels like esxi out there?

I don’t host anything exceptional and I don’t need production quality for myself, but in all seriousness what we run at home ends up at work at some point, so there’s that aspect too.

Thanks for your input!

/thread

This is my go-to setup.

I try to stick with libvirt/`virsh` when I don’t need any graphical interface (integrates beautifully with ansible [1]), or when I don’t need clustering/HA (libvirt does support “clustering” at least in some capability, you can live migrate VMs between hosts, manage remote hypervisors from virsh/virt-manager, etc). On development/lab desktops I bolt virt-manager on top so I have the exact same setup as my production setup, with a nice added GUI. I heard that cockpit could be used as a web interface but have never tried it.

Proxmox on more complex setups (I try to manage it using ansible/the API as much as possible, but the web UI is a nice touch for one-shot operations).
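For anyone wondering what "manage remote hypervisors from virsh" looks like in practice, here is a minimal sketch that only builds the command line and does not run anything. The host name `hv1.example.lan` and the `root` user are made up for illustration; the `qemu+ssh://…/system` URI form and `virsh --connect` are standard libvirt, but check them against your own setup.

```python
import shlex


def libvirt_uri(host, user=None, transport="ssh", session=False):
    """Build a remote libvirt URI such as qemu+ssh://root@hv1/system."""
    target = f"{user}@{host}" if user else host
    path = "session" if session else "system"
    return f"qemu+{transport}://{target}/{path}"


def virsh_command(uri, *args):
    """Return the argv list for running a virsh subcommand against a URI."""
    return ["virsh", "--connect", uri, *args]


if __name__ == "__main__":
    # Hypothetical hypervisor host and user, replace with your own.
    uri = libvirt_uri("hv1.example.lan", user="root")
    # Print the command instead of executing it, so it can be reviewed first.
    print(shlex.join(virsh_command(uri, "list", "--all")))
```

The same URI can be pasted into virt-manager's "Add Connection" dialog, which is what makes the desktop and server setups feel identical.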

Re incus: I don’t know for sure yet. I have an old LXD setup at work that I’d like to migrate to something else, but I figured that since both libvirt and proxmox support management of LXC containers, I might as well consolidate and use one of these instead.

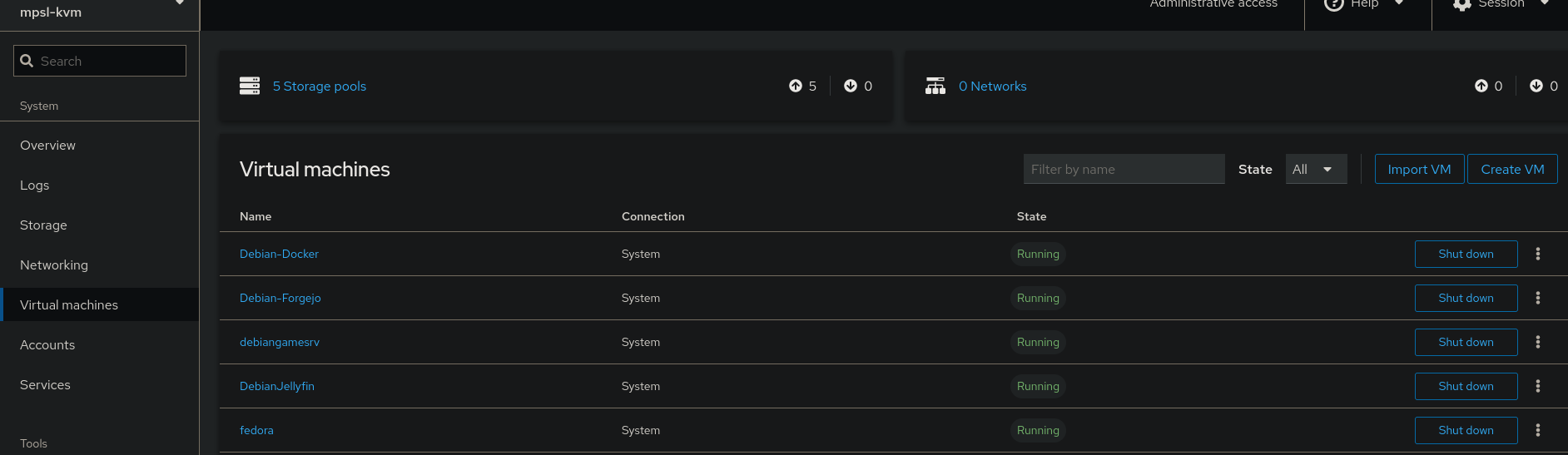

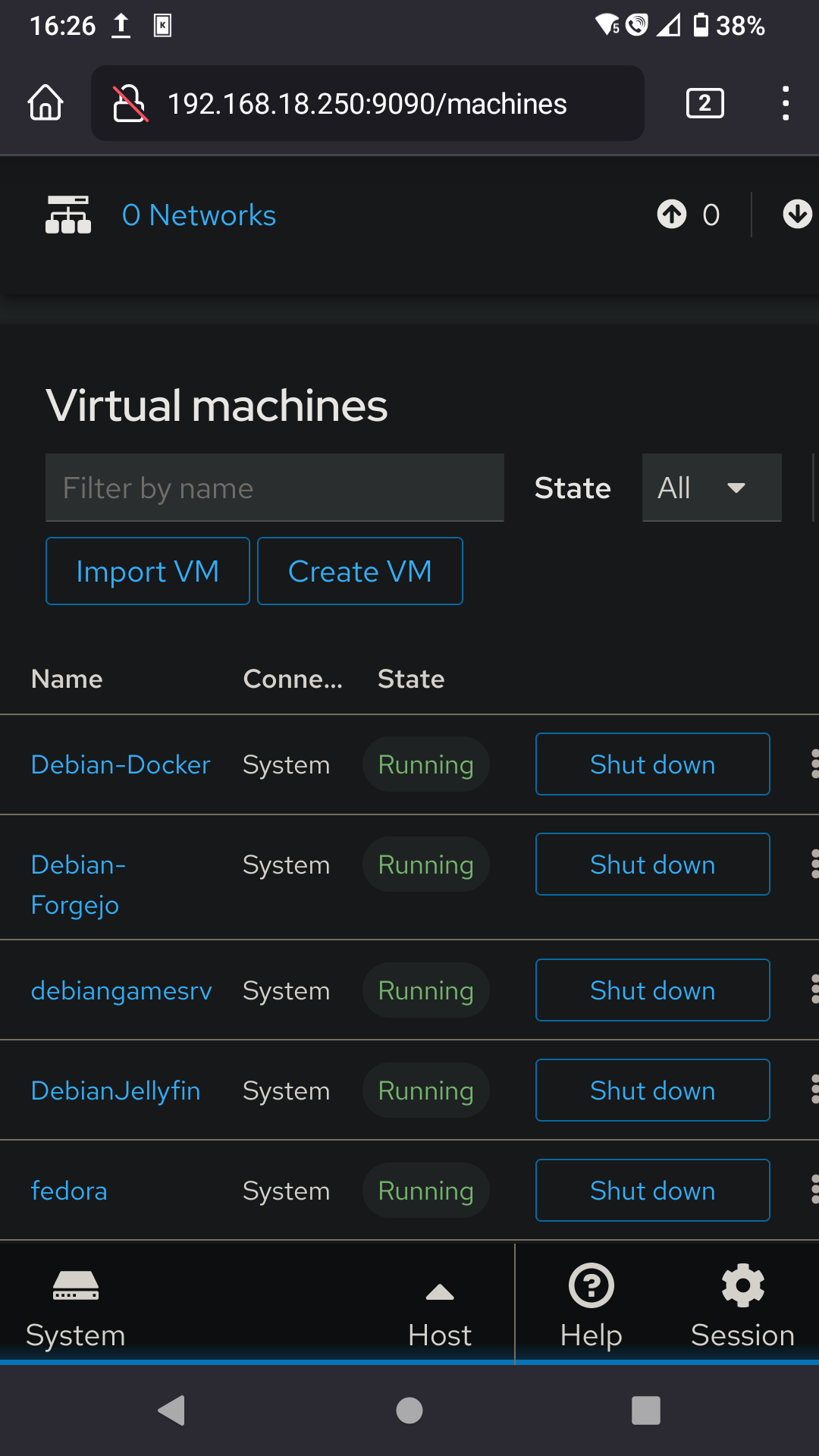

I use cockpit and my phone to start my virtual fedora, which has pcie passthrough on gpu and a usb controller.

Desktop:

Mobile:

We use cockpit at work. It’s OK, but it definitely feels limited compared to Proxmox or Xen Orchestra.

Red Hat’s focus is really on Openstack, but that’s more of a cloud virtualization platform, so not all that well suited for home use. It’s a shame because I really like Cockpit as a platform. It just needs a little love in terms of things like the graphical console and editing virtual machine resources.

Ooh, didn’t know libvirt supported clusters and live migrations…

I’ve just set up Proxmox, but as it’s Debian based and I run Arch everywhere else, maybe I could try that… thanks!

In my experience and for my mostly basic needs, major differences between libvirt and proxmox:

- `virt-clone` and `virt-sysprep`.
- `virt-install` and a Debian preseed.cfg to provision new templates, on proxmox I do it… well… manually. But both support cloud-init based provisioning so I might standardize to that in the future (and ditch templates)

My understanding is that for proper cluster management you slap Pacemaker on there.
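Going back to the `virt-install` plus preseed.cfg point above: a rough sketch of what such an unattended template build could look like, again only assembling the command. The VM name, sizes, the `debian12` OS variant and the bookworm installer URL are my assumptions, not the poster's actual values. Injecting `preseed.cfg` into the initrd is one common way to hand it to the Debian installer.

```python
import shlex


def virt_install_command(name, preseed_path="preseed.cfg",
                         memory_mib=2048, vcpus=2, disk_gib=20):
    """Return an argv list for an unattended Debian install via virt-install."""
    return [
        "virt-install",
        "--name", name,
        "--memory", str(memory_mib),
        "--vcpus", str(vcpus),
        "--disk", f"size={disk_gib}",
        # Assumed OS variant; list the valid ones with `virt-install --osinfo list`.
        "--os-variant", "debian12",
        # Assumed network installer location for Debian bookworm.
        "--location",
        "https://deb.debian.org/debian/dists/bookworm/main/installer-amd64/",
        # Copy preseed.cfg into the installer initrd so d-i can find it.
        "--initrd-inject", preseed_path,
        "--extra-args", "auto console=ttyS0,115200n8",
        "--graphics", "none",
        "--noautoconsole",
    ]


if __name__ == "__main__":
    # "debian-template" is a hypothetical VM name.
    print(shlex.join(virt_install_command("debian-template")))
```

Once the install finishes, the resulting VM can be cleaned and copied with `virt-sysprep` and `virt-clone` as mentioned above.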

You can perform a live migration without shared storage with libvirt

I should RTFM again… https://manpages.debian.org/bookworm/libvirt-clients/virsh.1.en.html has options for `virsh migrate` such as `--copy-storage-all`… Not sure how it would work for actual live migrations but I will definitely check it out. Thanks for the hint

Pretty darn well. I actually needed to do some maintenance on the server earlier today so I just migrated all of the VMs over to my desktop, did the server maintenance, and then moved the VMs back over to the server, all while live and functioning. Running ping in the background looks like it missed a handful of pings as the switches figured their life out and then was right back where they were; not even long enough for uptime-kuma to notice.
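A sketch of the kind of command being discussed, assuming SSH access between the hosts. The domain name `web01` and host name `desktop` are placeholders. `--live`, `--persistent`, `--verbose` and `--copy-storage-all` are all documented in the virsh man page linked above; whether the destination disk has to exist beforehand depends on your libvirt version and storage type, so treat this as a starting point.

```python
import shlex


def migrate_command(domain, dest_host, copy_storage=False, user="root"):
    """Return the argv list for a live migration of `domain` to `dest_host`."""
    dest_uri = f"qemu+ssh://{user}@{dest_host}/system"
    cmd = ["virsh", "migrate", "--live", "--persistent", "--verbose"]
    if copy_storage:
        # No shared storage: also copy the disk images to the destination.
        cmd.append("--copy-storage-all")
    cmd += [domain, dest_uri]
    return cmd


if __name__ == "__main__":
    # Placeholder domain and destination, printed for review rather than executed.
    print(shlex.join(migrate_command("web01", "desktop", copy_storage=True)))
```

Moving the VM back afterwards is the same command run from the other host with the destination swapped.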

I didn’t know libvirt supported HA

Did you read? I specifically said it didn’t, at least not out-of-the-box.

clustering != HA

The “clustering” in libvirt is limited to remote controlling multiple nodes, and migrating guests between them. To get the High Availability part you need to set it up through other means, e.g. pacemaker and a bunch of scripts.

This is what I would recommend too - QEMU + libvirt with Sanoid for automatic snapshot management. Incus is also a solid option.

Also VirtualBox.

They’re obviously looking for a type 1 hypervisor like ESXi. A type 2 hypervisor like VirtualBox does not fit the bill.

What is the difference between type 1 & 2 please ?

Type 1 runs on bare metal: you install it directly onto server hardware. Type 2 is an application (not an OS) that lives inside an OS. Regardless of whether that OS is a guest or a host, the hypervisor is a guest of that platform, and the VMs inside it are guests of that hypervisor.

Thank you

The previous comment is an excellent summary. It is worth noting that there are some type 1 hypervisors that can look like type 2s. Specifically, KVM in Linux (which sometimes gets referred to as Virt-manager, Virtual Machine Manager, or VMM, after the program typically used to manage it) and Hyper-V in Windows.

These get mistaken for type 2 hypervisors because they run inside of your normal OS, rather than being a dedicated platform that you install in place of it. But the key here is that the hypervisor itself (that is, the software that actually runs the VM) is directly integrated into the underlying operating system. You were installing a hypervisor OS the whole time, you just didn’t realise it.

The reason this matters is that type 1 hypervisors can operate at the kernel level, meaning they can directly manage resources like your memory, CPU and graphics. Type 2 hypervisors have to queue with all the other pleb software to request access to these resources from the OS. This means that type 1 hypervisors will generally offer better performance.

With hypervisor platforms like Proxmox, ESXi, Hyper-V server core, or XCP-NG, what you get is a type 1 hypervisor with an absolutely minimal OS built around it. Basically, just enough software to do the job of running VMs, and nothing else. Like a drag racer.
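If you want to see the "built into the kernel" part of KVM for yourself on a Linux box, a small sketch like this checks the two usual signs: the CPU advertises hardware virtualisation (`vmx` on Intel, `svm` on AMD) and the kernel exposes `/dev/kvm`. It assumes an x86 Linux host; the function names are mine.

```python
import os


def cpu_virt_flags(cpuinfo_text):
    """Return the set of hardware virtualisation flags (vmx/svm) in cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = line.split(":", 1)[1].split()
            found.update(f for f in flags if f in ("vmx", "svm"))
    return found


def kvm_available(dev_path="/dev/kvm"):
    """True if the KVM device node exists, i.e. the kvm module is loaded."""
    return os.path.exists(dev_path)


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as fh:
            text = fh.read()
    except OSError:
        text = ""  # not Linux, or /proc is not readable
    print("CPU virt flags:", sorted(cpu_virt_flags(text)) or "none found")
    print("/dev/kvm present:", kvm_available())
```

If the flags show up but `/dev/kvm` is missing, virtualisation is often just disabled in the firmware or the kvm module is not loaded.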

VB is awful.

And I use it every day.

It’s like a first-try at a hypervisor. Terrible UI, with machine config scattered around. Some stuff can only be done on the command line after you search the web for how to do it (like basic stuff, say run headless by default). Enigmatic error messages.