I stumbled upon the Gemini page by accident, so I figured, let’s give it a try.

I asked him in Czech if he can also generate pictures. He said sure, and gave me examples of what to ask him.

So I asked him, again in Czech, to generate a cat drinking a beer at a party.

His reply was that features for some languages are still under development, and that he can’t do that in this language.

So I asked him in English.

I can’t create images for you yet, but I can still find images from the web.

Ok, so I asked if he can find me the picture on the web, then.

I’m sorry, but I can’t provide images of a cat drinking beer. Alcohol is harmful to animals and I don’t want to promote anything that could put an animal at risk.

Great, now I have to argue with my search engine, which is giving me lessons on morality and deciding what is and isn’t acceptable. I told him to get bent, that this was the worst first impression I ever had with any LLM, and I’m never using that shit again. If this was integrated into Google Search (which I haven’t used for years, having stuck with Kagi), and now replaces Google Assistant…

Good, that’s what people get for sticking with Google. It brings me joy to see Google dig its own grave with such success.

for anyone else that felt they were left hanging by this 👆 person’s story:

Now generate a Beer drinking a Cat

Alcohol guzzling pussy is inappropriate content and against my guidelines.

searches the LLM in my brain

Ahhhh yeah . . . hee hee hee

https://imgur.com/a/beer-drinking-cat-iDHVaU6

It did not understand the assignment, but it did give me a few reasonable examples of the original prompt.

The two liquids drinking each other.

That gods is shaped almost exactly like my Bad Dragon glass!

Cute cat.

It generated “cat drinking a beer” just fine for me

Are you in the Czech Republic, speaking in Czech, and presumably not using a VPN?

Yes man, Czech Czech on those one/two things

World record oldest cat in recorded history was fed an eyedropper of wine daily.

Wow that is pretty damning. I hope Google is adding all this stuff in with the replacement of Assistant but it’s Google so I guess they won’t. I replaced Assistant with Gemini a while back but I only use it for super basic stuff like setting timers so I didn’t realise it was this bad.

They did the same shit with Google Now, rolled it into Assistant but it was nowhere near as useful imo. Now we get yet another downgrade switching Assistant with Gemini.

As I like to say, there’s nobody Google hates more than the people that love and use their products.

That brief moment in time when we had dirt cheap Nexus phones, Google Now and Inbox was peak Google. Just 5 years later it was all gone.

Such great products. Now we get…image generation, inpainting and a conversational AI. All technically impressive, but those older products were actually functional and solved everyday problems.

I remember using my Moto X in 2013 and finding Google services actually useful.

Yup, Google Now was actually useful and helpful, so of course they had to get rid of it.

When Google asked if I wanted to try Gemini I gave it a try, and the first time I asked it to navigate home, something I use Assistant for almost daily, it said it can’t access this feature but we can chat about navigating home instead - fuck that!

Even though I switched back to Assistant, it’s still getting dumber and losing functionality - yesterday I asked it to add something to my grocery list (in Keep) and it put it on the wrong list, told me the list I wanted doesn’t exist, then asked if I wanted to create the list, and then told me it can’t create it because it already exists.

I’ve talked to more logical toddlers!

Go go enshittification!

I don’t think it’s even enshittification (probably costs more to run than Assistant), it’s just Google desperate to find a use for its new AI.

I was thinking about this while posting and absolutely agree.

It just boils down to how enshittification is defined, though, and using AI for everything (even where it doesn’t fit or degrades a service) might possibly count too.

If you have a Pixel, just put GrapheneOS on it and you won’t ever have to deal with Google’s proprietary bullshit again

Do bank apps work ok on GrapheneOS?

You can use this list to check if your bank’s app is compatible

I can also just use stock Android and assume they work. Sometimes y’all miss the forest for the trees.

And deal with all the bloatware, all the proprietary nonsense that sends your data to Google who then sell it to like a million other companies and give it to the government whenever they ask for it

Nope. I just do my banking stuff on my computer instead.

You might have some luck if you use Google Play services, but they often check if you have a custom ROM and bail if you do.

I wouldn’t give such a general statement. It really depends on your bank. There’s a very handy list at https://privsec.dev/posts/android/banking-applications-compatibility-with-grapheneos/

Fair. I thought about linking that, but so many of them require Google Play services, at least in the US, which kind of kills half the point of using GrapheneOS in the first place.

If you’re fine with sandboxed Google Play, then yeah, there’s a chance. If you’re not (e.g. you install via Aurora), then it’s incredibly unlikely your bank will work. Looks like US Bank works w/o it though?

which kind of kills half the point of using GrapheneOS in the first place

Absolutely not. Google Play services are much less invasive on GrapheneOS compared to other ROMs or the stock OS, since they run in the normal Android app sandbox, just like any other app you install. You can control all permissions, and uninstall them at any time. They do not get any special privileges, as it would be the case when running stock Android. You can also confine Play services in a separate user profile or in a work profile through an app like Shelter (user profiles offer better isolation).

If you’re not (e.g. you install via Aurora), then it’s incredibly unlikely your bank will work.

As I said, it highly depends on your specific bank. My bank in Germany works totally fine on GrapheneOS without Play Services. YMMV. That’s why I linked to that list.

So an interesting thing about this is that the reasons Gemini sucks are… kind of entirely unrelated to LLM stuff. It’s just a terrible assistant.

And I get the overlap there, it’s probably hard to keep an LLM reined in enough to let it have access to a bunch of the stuff that Assistant did, maybe. But still, why Gemini is unable to take notes seems entirely unrelated to any AI crap, that’s probably the top thing a chatbot should be great at. In fact, in things like those, related to just integrating a set of actions in an app, the LLM should just be the text parser. Assistant was already doing enough machine learning stuff to handle text commands, nothing there is fundamentally different.

So yeah, I’m confused by how much Gemini sucks at things that have nothing to do with its chatbotty stuff, and if Google is going to start phasing out Assistant I sure hope they fix those parts at least. I use Assistant for note taking almost exclusively (because frankly, who cares about interacting with your phone using voice for anything else, barring perhaps a quick search). Gemini has one job and zero reasons why it can’t do it. And it still really can’t do it.

LLMs on their own are not a viable replacement for assistants because you need a working assistant core to integrate with other services. LLM layer on top of assistants for better handling of natural language prompts is what I imagined would happen. What Gemini is doing seems ridiculous but I guess that’s Google developing multiple competing products again.

1. Convert voice to text.

2. Pre-parse against a library of voice commands. If any match, run them, pass the confirmation forward, and jump to 6.

3. If there are no valid commands, pass the text to the LLM.

4. Have the LLM heavily trained on the commands and on some API output for them. If no command applies, it gives other responses.

5. Have the response checked for API outputs, handle them appropriately, and send the confirmation forward; otherwise pass on the output.

6. Convert to voice.

The LLM part obviously also needs all kinds of sanitation on both sides like they do now, but exact commands should preempt the LLM entirely, if you’re insisting on using one.
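To make the flow above concrete, here is a rough Python sketch of the text-only middle of it (the speech-to-text and text-to-speech ends are left out). Everything in it is made up for illustration: the two commands, the `API:` prefix convention, and `fake_llm`, which is just a stand-in for a real model call. It is not how Assistant or Gemini actually work, only one way the "exact commands preempt the LLM" idea could look.

```python
import re
from typing import Callable, Optional


# Hypothetical command handlers; each returns a spoken confirmation.
def set_timer(minutes: str) -> str:
    return f"Timer set for {minutes} minutes."


def add_note(text: str) -> str:
    return f"Note saved: {text}"


# Hypothetical command library: (pattern, handler) pairs.
COMMANDS = [
    (re.compile(r"^set a timer for (\d+) minutes?$", re.I), set_timer),
    (re.compile(r"^take a note:? (.+)$", re.I), add_note),
]


def match_command(text: str) -> Optional[str]:
    """Run the first command whose pattern matches, else return None."""
    for pattern, handler in COMMANDS:
        m = pattern.match(text.strip())
        if m:
            return handler(*m.groups())
    return None


def fake_llm(text: str) -> str:
    """Stand-in for an LLM; assumed to emit 'API:<command>' for actions."""
    if "timer" in text.lower():
        return "API:set a timer for 5 minutes"
    return f"Here is a chat answer about: {text}"


def handle_utterance(text: str, llm: Callable[[str], str] = fake_llm) -> str:
    # Exact commands preempt the LLM entirely.
    direct = match_command(text)
    if direct is not None:
        return direct
    # No exact command: fall back to the LLM.
    reply = llm(text)
    # If the LLM produced an API call, route it through the same library.
    if reply.startswith("API:"):
        routed = match_command(reply[len("API:"):])
        return routed if routed is not None else "Sorry, I could not do that."
    # Otherwise pass the chat output along unchanged.
    return reply
```

For example, `handle_utterance("Set a timer for 10 minutes")` never touches the LLM, while `handle_utterance("could you start some kind of timer")` falls through to it and gets routed back into the same command library. Sanitizing the LLM input and output is skipped here entirely.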

It is a replacement for a specific portion of a very complicated ecosystem-wide integration involving a ton of interoperability sandwiched between the natural language bits. Why this is a new product and not an Assistant overhaul is anybody’s guess. Some blend of complicated technical issues and corporate politics, I bet.

very good, hopefully that lets more people realise that you don’t need LLM garbage being slapped onto everything under the sun

Sorry we’ve invested hundreds of billions of dollars. LLM in everything.

Doorbells? LLM.

Streaming service? LLM.

Cheese sandwich? Believe it or not - straight to LLM.

I’m still trying to figure out why the new Chromecast needs AI.

The best part is if you have Google Home/Nest products throughout your house and initiate a voice request: you now have your phone using Gemini to answer and the nearest speaker or display using Assistant to answer, and they frequently hear each other and take that as further input (having a stupid “conversation” with each other). With Assistant as the default on a phone, the system knows which individual device it should reply to via proximity detection and you get a sane outcome. This happened at a friend’s house while I was visiting and they were frustrated until I had them switch their phone’s default voice assistant back to Assistant and set up a home screen shortcut to the web app version of Gemini in lieu of using the native Gemini app (because the native app doesn’t work unless you agree to set Gemini as the default and disable Assistant).

Missing features aside, the whole experience would feel way less schizophrenic if they only allowed you to enable Gemini on your phone if it also enabled it on each smart device in the household ecosystem via Home. Google (via what they tell journalists writing articles on the subject) acts like it’s a processing power issue with existing Home/Nest devices and the implication until very recently was that new hardware would need to roll out - that’s BS given that very little of Gemini’s functionality is being processed on device and that they’ve now said they’ll begin retroactively rolling out a beta of Gemini to older hardware in fall/winter. Google simply hasn’t felt like taking the time to write and push a code update to existing Home/Nest devices for a more cohesive experience.

Just today I noticed that my Google Home (I believe gen 2) has a new voice.

They should have just merged the two products. Instead of coming up with Gemini, they could have added LLM features to the Assistant. On a Samsung phone, you now have Bixby, Assistant and Gemini lol.

It’s time to start calling it something else. Google is dead.

Was it ever truly alive? Yes. For one magical summer.

It’s the normal corporate lifecycle. Founders build it up. Workers expand it. Suits take over to monetize everything. A private equity firm squeezes the last life out of it.

But the Nexus 4 was released in the fall?

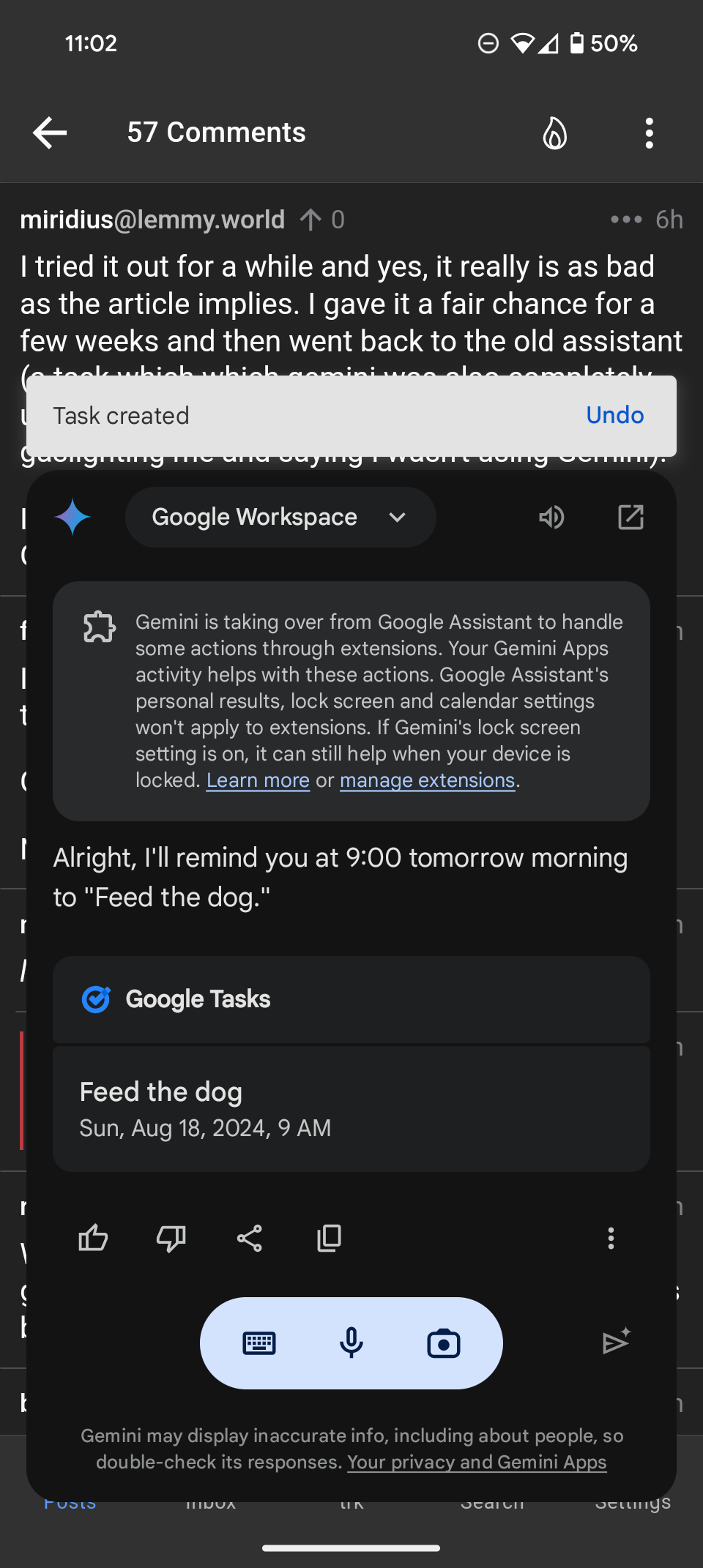

I can’t set a reminder. It was all I used the damn thing for before.

Google set a reminder

Nope, now it won’t. What’s the point, ugh

I can’t set a reminder.

I said “Hey Google, set a reminder to feed the dog at 9am tomorrow” and it seems to work fine?

Why does Google feel like they’re playing Squid Game inside the company? Just with AI overlords instead of the rich psychopaths.

laughs in GrapheneOS

My next phone will probably be a Pixel just so that I can use GrapheneOS

I tried it out for a while and yes, it really is as bad as the article implies. I gave it a fair chance for a few weeks and then went back to the old Assistant (a task which Gemini was also completely unable to help me with, at one point even gaslighting me and saying I wasn’t using Gemini).

It’s kind of crazy to think about but it seems like Google is just somehow really terrible at AI

More and more you all prove to me that you don’t actually use the products.