- cross-posted to:

- technology@beehaw.org

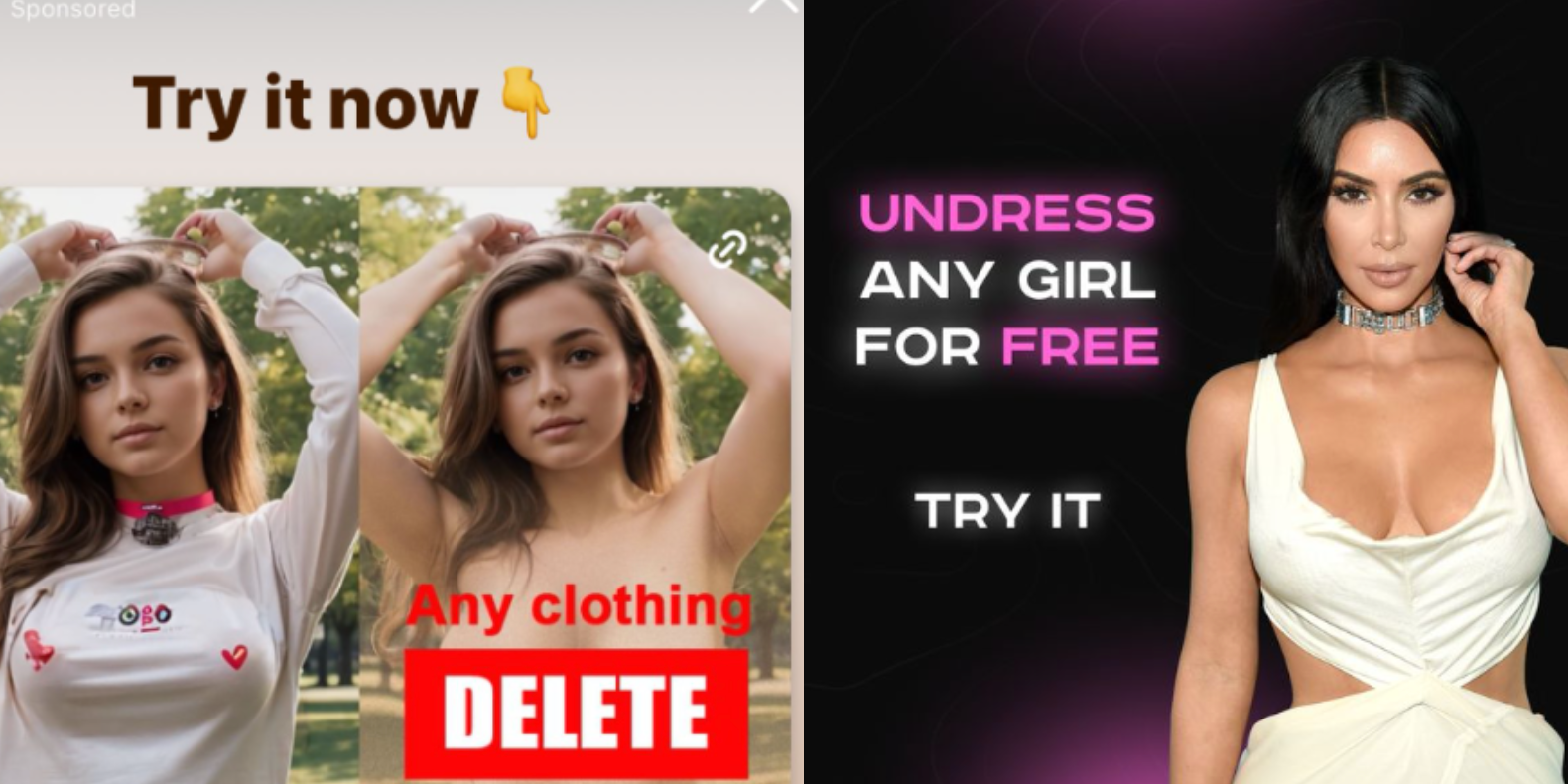

Instagram is profiting from several ads that invite people to create nonconsensual nude images with AI image generation apps, once again showing that some of the most harmful applications of AI tools are not hidden in the dark corners of the internet, but are actively promoted to users by social media companies unable or unwilling to enforce their policies about who can buy ads on their platforms.

While parent company Meta’s Ad Library, which archives ads on its platforms, who paid for them, and where and when they were posted, shows that the company has previously taken down several of these ads, many ads that explicitly invited users to create nudes, along with some of the ad buyers behind them, were still up until I reached out to Meta for comment. Some of these ads were for the best-known nonconsensual “undress” or “nudify” services on the internet.

It’s funny how many people leapt to the defense of Section 230 liability protection (part of Title V of the Telecommunications Act of 1996), as this helps shield social media firms from assuming liability for shit like this.

Sort of the Heads-I-Win / Tails-You-Lose nature of modern business-friendly legislation and courts.

Section 230 is what allows social media to exist at all, given that the problem of content moderation at scale is still unsolved. Take away 230 and no company will accept the liability. But we will have underground forums teeming with white power terrorist signalling, CSAM, and spam offering better penis pills and Nigerian princes.

The Google advertising system is also difficult to moderate at scale, but since Google makes money directly off ads, and loses money when YouTube content is not brand safe, Google tends to be harsh on content creators and lenient on advertisers.

It’s not a new problem, and nudification software is just the latest version of X-Ray Specs (which is to say we’ve been hungry to see teh nekkid for a very long time). The worst problem is when adverts install spyware or malware onto your device without your consent, which is why you need to adblock Forbes Magazine…or really just everything.

However, much of the world’s public discontent is fueled by information on the internet (some false, some misleading, some true, and a whole lot more that’s simultaneously true and heinous than we’d like in our society). So most of our officials would be glad to end Section 230 and shut down the flow of camera footage showing police brutality, or starving people in Gaza, or fracking mishaps releasing gigatons of rogue methane into the atmosphere. Our officials would very much love it if we’d go back to being uninformed, with the news media telling us how it’s sure awful living in the Middle East.

Without 230, we could go back to George W. Bush era methods, and just get our news critical of the White House from foreign sources, and compare the facts to see that they match, signalling our friends when we detect false propaganda.