XL Turbotastic Mega Ginormous, etc. Hate naming schemes like this. Why not just make it v2.0 or the Pro version instead? Why use multiple words that make it sound bigger and better? Marketing BS that just sounds dumb.

Not sure why you have a problem with it, the naming here makes a lot of sense if you know the context.

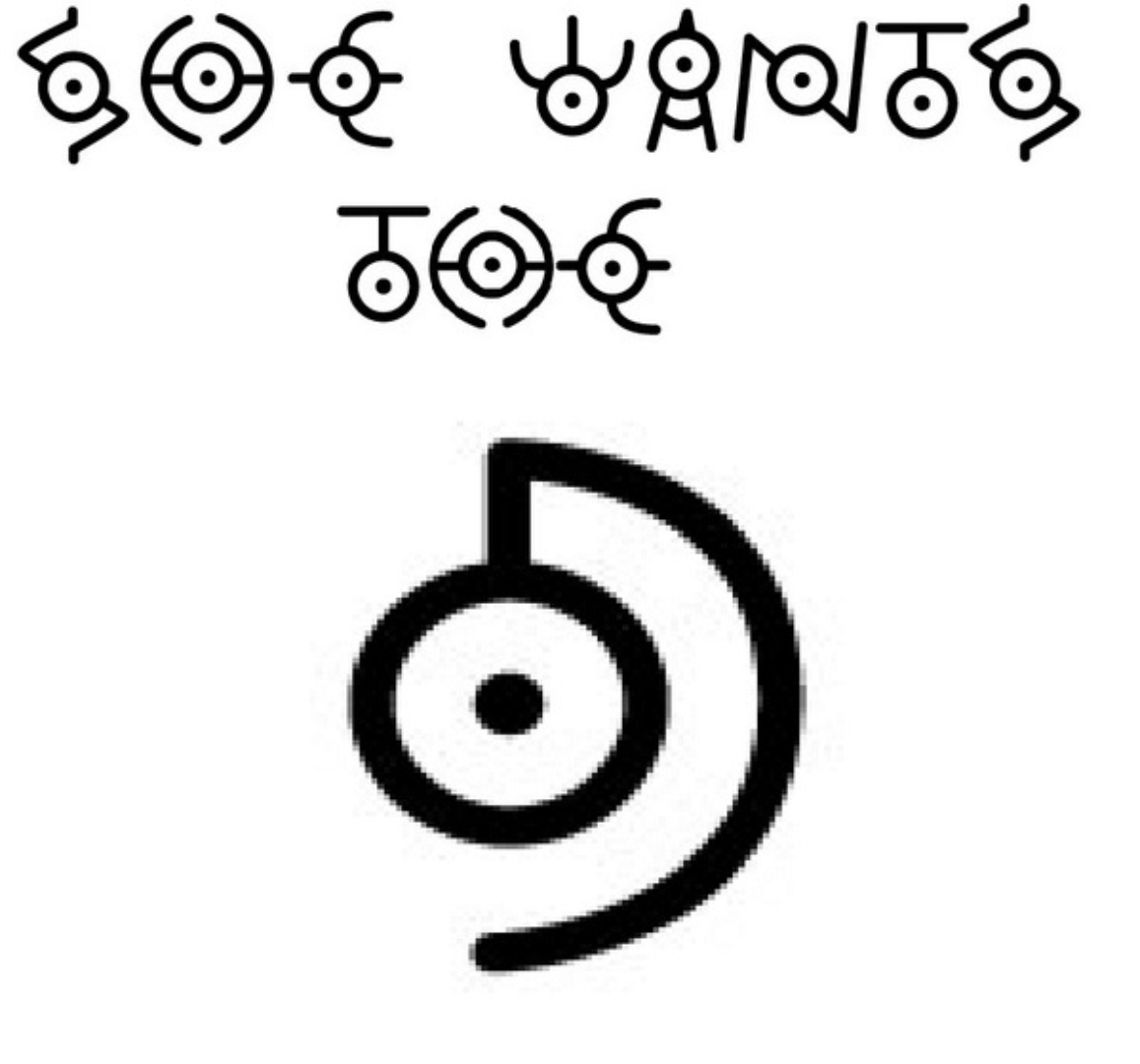

Stable Diffusion --> The original SD with versions like 1.5, 2.0, 2.1 etc.

Stable Diffusion XL --> A version of SD with much bigger training data and support for much larger resolutions (hence, XL)

Stable Diffusion XL Turbo --> A version of SDXL that is much faster (hence, Turbo)

They have different names because they’re actually different things; it’s not exactly a v1.0 --> v2.0 scenario.

Thanks for the context. That does make it much less redundant.

Naming schemes that aren’t clear are absolute garbage.

What if you’re new to it, and there are 6 different recent versions of something all named with a description instead of version number? Is Jumbo newer than Mega?

Fuck it, I’m ranting about this because it still upsets me.

I wanted to buy a 3DS to play Shovel Knight and Binding of Isaac. Reading up on them, I found that BoI would only play on a New 3DS XL. Cool.

Went to the store and bought a new 3DS XL, only to find out I got the wrong one. What I wanted was a NEW 3DS XL, and what I got was a 3DS XL that was new. There is a difference, it took me 4 days to notice, and I was working out of town for the next month, so I couldn’t return it. FUN!

So screw naming new versions of things with names instead of numbers. But somehow, Microsoft screwed that one up.

KISS: Keep it simple, stupid.

Sure, 3DS names are dumb, but this is definitely not the case here. Using version numbers instead of different names for different things causes insane confusion and having to over-explain what it is.

See: DLSS

DLSS 2 is just DLSS 1 but better. DLSS 3 is frame generation that isn’t compatible with most hardware. DLSS 3.5 is similar to DLSS 2 but includes enhanced raytracing denoising.

It’s a nightmare. Making a version 2, 3, 4 etc. of something also makes it sound like there’s no reason to use the old version, whereas a lot of people are still using regular Stable Diffusion over Stable Diffusion XL.

Imagine if the discussion was “Hey don’t use Stable Diffusion 3 since you need a lot of VRAM, you should be using Stable Diffusion 1.5 or Stable Diffusion 2.1, but also it’s worth getting a new GPU for Stable Diffusion 4 cuz it’s very fast but has lower quality than version 3”

> Why not just make it v2.0 or the Pro version instead?

“Pro version” is equally cringe.

Yeah, I get that. It would just have made more sense given that it’s widely used. Though I’ve been told why the name is so weird, and it makes some sense now.

Here are my suggestions:

Stable Diffusion Free

Stable Diffusion Paid with Limitations

Stable Diffusion Paid Unlimited

I agree with you in general, but for Stable Diffusion, “2.0/2.1” was not an incremental direct improvement on “1.5” but was trained and behaves differently. XL is not a simple upgrade from 2.0, and since they say this Turbo model doesn’t produce as detailed images it would be more confusing to have SDXL 2.0 that is worse but faster than base SDXL, and then presumably when there’s a more direct improvement to SDXL have that be called SDXL 3.0 (but really it’s version 2) etc.

It’s less like Windows 95->Windows 98 and more like DOS->Windows NT.

That’s not to say it all couldn’t have been better named. Personally, instead of ‘XL’ I’d rather they start including the base resolution and something to reference whether it uses a refiner model etc.

(Note: I use Stable Diffusion but am not involved with the AI/ML community and don’t fully understand the tech. I’m not trying to claim expert knowledge; this is just my interpretation.)

AFAIU SDXL is actually, erm, a genetic descendant of SD1.5: its architecture was expanded, weights were transferred from 1.5, and it was then trained on bigger inputs (512x512 is, in the end, awfully small). SD2.0 is a completely new model trained from scratch, and as far as I’m aware no one is actually using it. Also, no one is using the SDXL refiner. If you go to civitai, it’s all models with detailer capabilities baked in; what you do see is workflows that generate an image, add some noise at the very end, and repeat the last couple of steps. Using the base SDXL refiner on the output of other SDXL models is sometimes outright comical, because it sometimes has no idea what it’s looking at and then produces exquisite surface texture details of the wrong material. Say, a silk keyboard, because it doesn’t realise it’s supposed to be ABS and, well, black silk exists.
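The "add some noise at the very end and repeat the last couple of steps" workflow is essentially img2img with a low denoise strength. Here is a minimal sketch of which steps actually get re-run, assuming the convention diffusers' img2img pipelines use for their `strength` parameter; the function name `rerun_steps` is hypothetical:

```python
def rerun_steps(num_inference_steps: int, strength: float) -> range:
    """Return the indices of the denoising steps an img2img pass re-runs.

    Follows the diffusers img2img convention: the input image is re-noised to
    the point the schedule would have reached after skipping the first
    (num_inference_steps - int(num_inference_steps * strength)) steps, and
    only the remaining steps are executed.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    t_start = max(num_inference_steps - init_timestep, 0)
    return range(t_start, num_inference_steps)


if __name__ == "__main__":
    steps = rerun_steps(20, 0.25)
    print(f"re-running steps {steps.start}..{steps.stop - 1} ({len(steps)} of 20)")
```

A low strength like 0.25 keeps the composition and only redoes the last, detail-oriented steps, which is why it works as a cheap "refiner" pass.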

Yeah I got some good replies to my comment explaining it. Makes more sense now.

I heard they were all child murderers! 😱

You might be waiting a long time. We aren’t going back; this is one of those things that won’t go back into the box. So the best course of action now is to prepare for it, learn to live with it, and make sure it’s not used to oppress us.

Agreed. It is similar to waiting for Photoshop to die. It’s not going away.

Lmao I’m old enough to remember “the internet is just a fad”

What’s out there that actually works offline? Stable Diffusion is the only one I’ve heard about, everyone else is more interested in exclusively selling AI as a service.

Llama etc., smaller ChatGPT-style language models you can run yourself. Never tried them, but they’re out there. There’s also plenty of support stuff that may or may not be interesting, e.g. turning images into depth maps in case you don’t have enough angles for actual photogrammetry. controlnet-aux for ComfyUI has a good selection of that analysis stuff.

This isn’t free BTW folks

I haven’t messed with any AI imaging stuff yet. Any free recommendations to just have some fun?

Bing and OpenAI still have free stuff. Bing’s is actually really good.

Great, even more online noise that I can look forward to.

And the resulting faces still all have lazy eyes, asymmetric features, and significantly uncanny issues.

Humans have asymmetric features. No one is symmetrical.

These features are abnormally asymmetric to the point of being off-putting. General symmetry of features is a significant part of what attracts people to one another, and it’s why facial droops from things like Bell’s palsy or strokes can often be psychologically difficult for the patients who experience them.

General symmetry, not exact symmetry.

Anecdote: I think Denzel Washington is supposed to have one of the most symmetrical faces.

I’ve tried to install this multiple times but always manage to fuck it up somehow. I think the guides I’m following are outdated or pointing me to one or more incompatible files.

Tough luck running any code published by people who put out models, it’s research-grade software in every sense of the word. “Works on my machine” and “the source is the configuration file” kind of thing.

Get yourself ComfyUI. They’re always very fast when it comes to supporting new stuff, and the thing is generally faster and easier on VRAM than A1111. The prerequisite is torch (the Python package) built with CUDA (Nvidia), ROCm (AMD), or whatever Intel uses. Fair warning: getting ROCm to run on cards that aren’t officially supported is an adventure in itself. I’m still on torch-1.13.1+rocm5.2; newer builds just won’t work, because the GPU I’m telling ROCm I have (so that it runs in the first place) supports instructions that my actual GPU doesn’t, and newer builds started using them.
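A quick way to check which backend an installed torch build actually targets, sketched in Python. It assumes ROCm builds report their version through `torch.version.hip` and expose AMD GPUs through the regular `torch.cuda` API, that Intel GPUs show up as the `xpu` backend on recent releases, and that `HSA_OVERRIDE_GFX_VERSION` is the environment variable commonly used to make ROCm treat an unsupported card as a supported one. The helper name `describe_torch_backend` is hypothetical:

```python
import os


def describe_torch_backend() -> str:
    """Summarize which GPU backend the installed torch build was compiled for."""
    try:
        import torch
    except ImportError:
        return "torch is not installed"

    lines = [f"torch {torch.__version__}"]
    hip = getattr(torch.version, "hip", None)
    cuda = getattr(torch.version, "cuda", None)
    if hip:
        lines.append(f"ROCm/HIP build {hip}")
    elif cuda:
        lines.append(f"CUDA build {cuda}")
    else:
        lines.append("no CUDA/ROCm support compiled in")

    # ROCm builds expose AMD GPUs through the torch.cuda namespace as well.
    if torch.cuda.is_available():
        lines.append(f"device 0: {torch.cuda.get_device_name(0)}")
    else:
        lines.append("no usable CUDA/ROCm device found")

    # Intel GPUs use the separate "xpu" backend where it exists.
    xpu = getattr(torch, "xpu", None)
    if xpu is not None and hasattr(xpu, "is_available") and xpu.is_available():
        lines.append("Intel XPU device available")

    # Report the ROCm GPU-spoofing override, if one is set.
    override = os.environ.get("HSA_OVERRIDE_GFX_VERSION")
    if override:
        lines.append(f"HSA_OVERRIDE_GFX_VERSION={override}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(describe_torch_backend())
```

If this reports "no CUDA/ROCm support compiled in", you have a CPU-only torch wheel, which is a common reason guides seem to "not work".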

Do you use ComfyUI?

That’s impressive

This is great news for people who make animations with deforum as the speed increase should make Rakile’s deforumation GUI much more usable for live composition and framing.

This is the best summary I could come up with:

Stability detailed the model’s inner workings in a research paper released Tuesday that focuses on the ADD (Adversarial Diffusion Distillation) technique.

One of the claimed advantages of SDXL Turbo is its similarity to Generative Adversarial Networks (GANs), especially in producing single-step image outputs.

Stability AI says that on an Nvidia A100 (a powerful AI-tuned GPU), the model can generate a 512×512 image in 207 ms, including encoding, a single de-noising step, and decoding.
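Stability AI's claimed latency translates into throughput as follows, assuming images are generated one at a time (no batching) and every call hits the 207 ms figure; `throughput` is a hypothetical helper:

```python
def throughput(latency_ms: float) -> tuple[float, float]:
    """Convert per-image latency in milliseconds to (images/second, images/minute)."""
    per_second = 1000.0 / latency_ms
    return per_second, per_second * 60.0


if __name__ == "__main__":
    per_s, per_min = throughput(207)  # claimed A100 latency for one 512x512 image
    print(f"{per_s:.1f} images/s, {per_min:.0f} images/min")
    # prints "4.8 images/s, 290 images/min"
```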

Releasing the model under a non-commercial research license has already been met with some criticism in the Stable Diffusion community, but Stability AI has expressed openness to commercial applications and invites interested parties to get in touch for more information.

Meanwhile, Stability AI itself has faced internal management issues, with an investor recently urging CEO Emad Mostaque to resign.

Stability AI offers a beta demonstration of SDXL Turbo’s capabilities on its image-editing platform, Clipdrop.

The original article contains 553 words, the summary contains 138 words. Saved 75%. I’m a bot and I’m open source!

Does it actually run any faster, though? For instance, if I manually spun up a model with the diffusers library and ran it locally on DirectML (dml), would there be any difference?

Edit: Assuming we’re normalizing the output to something reasonable, e.g. a recognizable picture of a dog.
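One way to test this yourself, sketched in Python under assumptions: it uses diffusers' `AutoPipelineForText2Image` with the `stabilityai/sdxl-turbo` checkpoint and the single-step, `guidance_scale=0.0` settings from the model card, and the helper names are hypothetical. Since Turbo is a distilled SDXL, the speedup should come mostly from needing one step instead of dozens rather than from each step being cheaper, so the interesting comparison is total steps on the same device.

```python
import time
from typing import Any, Callable


def time_generation(
    pipe: Callable[..., Any],
    prompt: str,
    steps: int,
    guidance_scale: float = 0.0,
    size: int = 512,
) -> float:
    """Return wall-clock seconds for a single pipeline call at the given step count."""
    start = time.perf_counter()
    pipe(
        prompt=prompt,
        num_inference_steps=steps,
        guidance_scale=guidance_scale,
        height=size,
        width=size,
    )
    return time.perf_counter() - start


def load_sdxl_turbo(device: Any) -> Any:
    """Load SDXL Turbo via diffusers and move it to `device`.

    Imports are local so this module can be imported without torch/diffusers.
    float16 weights may not work on every backend; fall back to float32 if needed.
    """
    import torch
    from diffusers import AutoPipelineForText2Image

    pipe = AutoPipelineForText2Image.from_pretrained(
        "stabilityai/sdxl-turbo", torch_dtype=torch.float16, variant="fp16"
    )
    return pipe.to(device)
```

With `torch_directml` installed, something like `pipe = load_sdxl_turbo(torch_directml.device())` followed by `time_generation(pipe, "a photo of a dog", 1)` would give the Turbo number to compare against a regular SDXL checkpoint run at its usual step count. Run one call first as a warm-up, since the first call includes one-off setup costs.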