Have you used AI to code? You don’t say “hey, write this file” and then commit it as “AI Bot 123 aibot@company.com”.

You start writing a method and get auto-completes that are sometimes helpful. Or you ask the bot to write out an algorithm. Or to copy something and modify it 30 times.

You’re not exactly keeping track of everything the bots did.

yeah, that’s… one of the points in the article

I’ll admit I skimmed most of that train wreak of an article - I think it’s pretty generous saying that it had a point. It’s mostly recounts of people complaining about AI. But if they hid something in there about it being remarkably useful in cases but not writing entire applications or features then I guess I’m on board?

Well, sometimes I think the web is flooded with advertising and spam praising AI. For these companies, it makes perfect sense because billions of dollars have been spent at these companies and they are trying to cash in before the tides might turn.

But do you know what is puzzling (and you do have a point here)? Many posts that defend AI do not engage in logical argumentation. Instead they argue beside the point, appeal to emotions, fall back on the short-circuited argument that “new” always equals “better”, or claim that AI is useful for coding as long as the code is not complex (compare that to the objection that mathematics is simple as long as it is not complex, which is a red herring and a laughable argument). So, many thanks for pointing out the above and giving, in a few words, a bunch of examples which underline that one has to think carefully about this topic!

The problem is that you really only see two sorts of articles.

AI is going to replace developers in 5 years!

AI sucks because it makes mistakes!

I actually see a lot more of the latter response on social media to the point where I’m developing a visceral response to the phrase “AI slop”.

Both stances are patently ridiculous though. AI cannot replace developers and it doesn’t need to be perfect to be useful. It turns out that it is a remarkably useful tool if you understand its limitations and use it in a reasonable way.

it’s a car that only explodes once in a blue moon!

No, it’s a car that breaks down once you go faster than 60km/h. It’s extremely useful if you know what you’re doing and use it only for tasks that it’s good at.

if that’s the analogy you want, make it 20 km/h

Great analogy! Even in this thread there are heaping amounts of copium, with people saying, “Meh, AI will never be able to do my job.”

I fucking promise in 5 years, ai will be doing the job they have right now. lol

Hey @dgerard@awful.systems, care to weigh in on this “train wreak [sic] of an article?”

I asked GitHub Copilot and it added `import wreak` to .NET, so we’ll get back to you.

Or to copy something and modify it 30 times.

This seems like a very bad idea. I think we just need more lisp and less AI.

“Hey AI - Create a struct that matches this JSON document that I get from a REST service”

Bam, it’s done.

Or

“Hey AI - add a schema prefix to all of the tables and insert statements in the SQL script.”
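For anyone who hasn’t seen the first kind of request in practice, here is a rough sketch of what “a struct that matches this JSON document” tends to come down to. It is written in Python with a dataclass standing in for the struct. The payload, the `Item` name, and its fields are all made up for illustration and don’t come from any real REST service.

```python
import json
from dataclasses import dataclass

# Hypothetical payload: the field names are invented for this example.
SAMPLE = '{"id": 7, "name": "widget", "tags": ["a", "b"], "price": 9.5}'


@dataclass
class Item:
    """Typed container mirroring the top-level keys of SAMPLE."""
    id: int
    name: str
    tags: list[str]
    price: float


def parse_item(raw: str) -> Item:
    """Decode a JSON string and map its keys onto Item fields.

    Raises TypeError if the document has missing or unexpected keys,
    because the keys are passed straight through as keyword arguments.
    """
    data = json.loads(raw)
    return Item(**data)


item = parse_item(SAMPLE)
```

It’s boilerplate either way, which is sort of the point: tedious to type, easy to check by eye against the document.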

People have such a hate boner for AI here that they are downvoting actual good use of it…

Good point.

This is the point that the “AI will do it all” crowd is missing. Current AI doesn’t innovate. Full stop. It copies.

The need for new code written by folks who understand what they’re writing isn’t gone, and won’t go away.

Whether those folks can be AI is an open question.

Whether we can ever create an AI that can actually innovate is an interesting open question, with little meaningful evidence in either direction, today.

I used it only as a last resort. I verify it before using it. I have only used it for about 0.11% of my project. I would not recommend AI.

My dude, I verify code other humans write. Do you think I’m not verifying code written by AI?

I highly recommend using AI. It’s much better than a Google search for most things.

It’s not good because it has no context on what is correct or not. It’s constantly making up functions that don’t exist or attributing functions to packages that don’t exist. It’s often sloppy in its responses because the source code it parrots is some amalgamation of good coding and terrible coding. If you are using this for your production projects, you will likely not be knowledgeable when it breaks, it’ll likely have security flaws, and will likely have errors in it.

So you’re saying I’ve got a shot?

And I’ll keep saying this: you can’t teach a neural network to understand context without creating a generalised context engine, another word for which is AGI.

Fidelity is impossible to automate.

If humans are so good at coding, how come there are 8100000000 people and only 1500 are able to contribute to the Linux kernel?

I hypothesize that AI has average human coding skills.

Average drunk human coding skills

Well, according to Microsoft, mildly drunk coders work better.

A million drunk monkeys on typewriters can write a work of Shakespeare once in a while!

But who wants to pay $50 for a front-row theater ticket to see a play written by monkeys?

The average coder is a junior, due to the explosive growth of the field (similar to how the average age is very young in some fast-growing nations). Thus what is average is far below what good code is.

On top of that, good code cannot be automatically identified by algorithms. Some very good codebases might look bad at a superficial level. For example, the code base of LMDB is very different from what common style guidelines suggest, but it is actually a masterpiece which is widely used. And vice versa, it is not difficult to make crappy code look pretty.

“Good code” is not well defined and your example shows this perfectly. LMDB’s codebase is absolutely horrendous when your quality criteria for good code are Readability and Maintainability. But it’s a perfect masterpiece if your quality criteria are Performance and Efficiency.

Most modern software should be written with the first two in mind, but for a DBMS, the latter are way more important.

AI is at its most useful in the early stages of a project. Imagine coming to the fucking ssh project with AI slop thinking it has anything of value to add 😂

The early stages of a project are exactly where you should think long and hard about what exactly you want to achieve, what qualities you want the software to have, what the detailed requirements are, how you test them, and how the UI should look. And from that, you derive the architecture.

AI is fucking useless at all of that.

In all complex planned activities, laying the right groundwork and foundations is essential for success. Software engineering is no different. You won’t order a bricklayer apprentice to draw the plan for a new house.

And if your difficulty is a lack of detailed knowledge of a programming language, it might be - depending on the case! - the best approach to write a first prototype in a language you know well, so that your head is free to think about the concerns listed in paragraph 1.

the best approach to write a first prototype in a language you know well

Ok, writing a web browser in POSIX shell using yad now.

writing a web browser in POSIX shell

Not HTML but the much simpler Gemini protocol - well, you could have a look at Bollux, a Gemini client written in shell, or at ereandel:

https://github.com/kr1sp1n/awesome-gemini?tab=readme-ov-file#terminal

I’m going back to TurboBASIC.

Can Open Source defend against copyright claims for AI contributions?

If I submit code to ReactOS that was trained on leaked Microsoft Windows code, what are the legal implications?

what are the legal implications?

It would be so fucking nice if we could use AI to bypass copyright claims.

“No officer, I did not write this code. I trained AI on copyrighted material and it wrote the code. So I’m innocent.”

If I submit code to ReactOS that was trained on leaked Microsoft Windows code, what are the legal implications?

None. There is a good chance that leaked MS code found its way into training data, anyway.

I am not sure how you arrived at “none” from your second sentence. The second sentence is exactly my point.

Alternatively then, can I just use the Microsoft source code and claim that I got it from AI? That seems to be your point here.

No. If it’s a copy, then it falls under copyright regardless of how the copy is made. The question wasn’t about copying, though.

Be aware that copyright only covers the creative elements, i.e., things that other people would do differently. It also doesn’t cover ideas, methods, and the like. It also doesn’t cover very short or obvious creations. So, copyright on code comes from UI design, comments, names, even the ordering of lines, functions, splitting the code into files, using shorthand or not, and so on. Snippets and even short functions are typically not copyrightable. If you have some short program that anyone would write that way, then that’s not copyrightable, beyond comments and maybe names.

I am not a programmer and I think it’s silly to think that AI will replace developers.

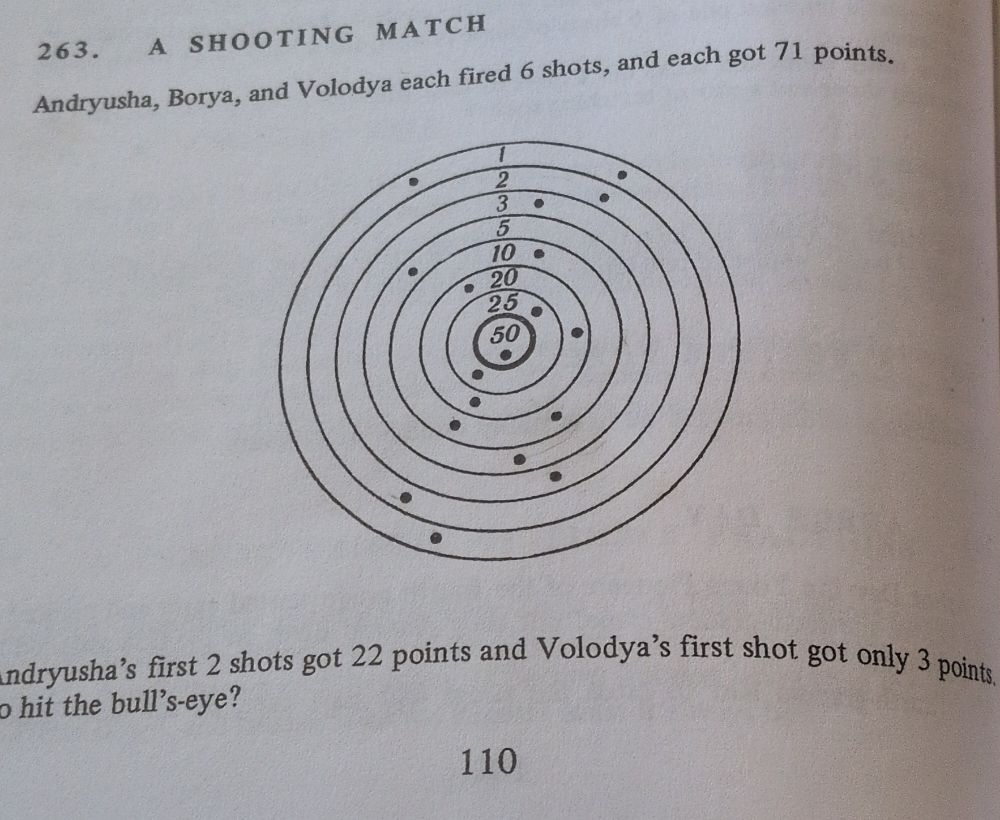

But I was working through a math problem in Moscow Puzzles with my kiddo.

We had solved it, but I wasn’t sure he got it at a deep level. So I figured I’d do something in Excel or maybe just do cut-outs. But I figured I’d try to find a web app that would do this better. Nothing really came up that was a good match. But then I thought, let’s see how bad AI programming can be. I’d fought with it over some Excel functions, and it’s been mainly useful in pointing me in the right direction, but only occasionally getting me over the finish line.

After about 6 to 8 hours of work, a little debugging, having it teach and quiz me occasionally, and some real frustration at pointing out that a feature I had previously changed had re-emerged, I eventually had something that worked.

The Shooting Range Simulator is a web-based application designed to help users solve a logic puzzle involving scoring points by placing blocks on vertical number lines.

A developer friend of mine said: “I took a quick scroll through the code. Looks pretty clean, but I didn’t dive in enough to really understand it. Definitely all that CSS BS would take me ages to do without AI.”

I don’t take credit for this and don’t pretend that this was my work, but I know my kiddo is excited to try the tool. I hope he learns from it and we bond over a math problem.

I know that everyone is worried about this tool, but moments like those are not nothing. Personally, I’m a Luddite and think the new tools should be deployed by the people whose livelihoods they will affect and not the business owners.

Personally, I’m a Luddite and think the new tools should be deployed by the people whose livelihoods they will affect and not the business owners.

Thank you for correctly describing what a Luddite wants and does not want.

Yes, despite the irrational phobia amongst the Lemmings, AI is massively useful across a wide range of examples like you’ve just given as it reduces barriers to building something.

As a CS grad, the problem isn’t it replacing all programmers, at least not immediately. It’s that a senior software engineer can manage a bunch of AI agents, meaning there’s less demand for developers overall.

Same way tools like Wix, Facebook, etc. came in and killed the need for a bunch of web developers who served small businesses.

As a CS grad, the problem isn’t it replacing all programmers, at least not immediately. It’s that a senior software engineer can manage a bunch of AI agents, meaning there’s less demand for developers overall.

Yes! You get it. That right there proves that you’ll make it through just fine. So many in this thread are denying that AI is gonna take jobs. But you gave a great scenario.

If AI was good at coding, my game would be done by now.

FTA: The user considered it was the unpaid volunteer coders’ “job” to take his AI submissions seriously. He even filed a code of conduct complaint with the project against the developers. This was not upheld. So he proclaimed the project corrupt. [GitHub; Seylaw, archive]

This is an actual comment that this user left on another project: [GitLab]

As a non-programmer, I have zero understanding of the code and the analysis and fully rely on AI and even reviewed that AI analysis with a different AI to get the best possible solution (which was not good enough in this case).

Who makes a contribution as aibot514? No one. People use AI for open source contributions, but more in a ‘fix this bug’ way, not in a fully automated, contribution-under-the-name-ai123 way.

Counter-argument: If AI code was good, the owners would create official accounts to create contributions to open source, because they would be openly demonstrating how well it does. Instead all we have is Microsoft employees being forced to use and fight with Copilot on GitHub, publicly demonstrating how terrible AI is at writing code unsupervised.

Bingo

Yes, that’s exactly the point. AI is terrible at writing code unsupervised, but it’s amazing as a supportive tool for real devs!

Microsoft has set up Copilot to make contributions to the dotnet runtime: https://github.com/dotnet/runtime/pull/115762 I’m sure maintainers spend more time reviewing and interacting with Copilot than it would have taken to write it themselves.

Microsoft is doing this today. I can’t link it because I’m on mobile. It is in dotnet. It is not going well :)

My theory is not a lot of people like this AI crap. They just lean into it for the fear of being left behind. Now you all think it’s just gonna fail and it’s gonna go bankrupt. But a lot of ideas in America are subsidized. And they don’t work well, but they still go forward. It’ll be you, the taxpayer, that will be funding these stupid ideas that don’t work, that are hostile to our very well-being.

Mostly closed source, because open source rarely accepts them as they are often just slop. Just assuming stuff here, I have no data.

And when they contribute to existing projects, their code quality is so bad, they get banned from creating more PRs.

The creator of curl just made a rant about users submitting AI slop vulnerability reports. It has gotten so bad they will reject any report they deem AI slop.

So there’s some data.

Ask Daniel Stenberg.

As a dumb question from someone who doesn’t code, what if closed source organizations have different needs than open source projects?

Open source projects seem to hinge a lot more on incremental improvements and change only for the benefit of users. In contrast, closed source organizations seem to use code more to quickly develop a new product or change that justifies money. Maybe closed source organizations are more willing to accept slop code that is bad but can barely work versus open source which won’t?

Baldur Bjarnason (who hates AI slop) has posited precisely this:

My current theory is that the main difference between open source and closed source when it comes to the adoption of “AI” tools is that open source projects generally have to ship working code, whereas closed source only needs to ship code that runs.

That’s basically my question. If the standards of code are different, AI slop may be acceptable in one scenario but unacceptable in another.

Maybe closed source organizations are more willing to accept slop code that is bad but can barely work versus open source which won’t?

Because most software is internal to the organisation (therefore closed by definition) and never gets compared or used outside that organisation: Yes, I think that when that software barely works, it is taken as good enough and there’s no incentive to put more effort to improve it.

My past year (and more) of programming business-internal applications has been characterised by upper management imperatives to “use Generative AI, and we expect that to make you nerd faster” without any effort spent to figure out whether there is any net improvement in the result.

Certainly there’s no effort spent to determine whether it’s a net drain on our time and on the quality of the result. Which everyone on our teams can see is the case. But we are pressured to continue using it anyway.

I’d argue the two aren’t as different as you make them out to be. Both types of projects want a functional codebase, both have limited developer resources (communities need volunteers, businesses have a budget limit), and both can benefit greatly from the development process being sped up. Many development practices that are industry standard today started in the open source world (style guides and version control strategy, to name two heavy hitters) and there’s been some bleed-through from the other direction as well (tool juggernauts like Atlassian having new open source alternatives made directly in response).

No project is immune to bad code. There’s even a lot of bad code out there that was believed to be good at the time: it mostly worked, in retrospect we learned how bad it was, but no one wanted to fix it.

The end goals and purposes are for sure different between community passion projects and corporate, financially driven projects. But the way you get there is more or less the same, and that’s the crux of the article’s argument: Historically open source and closed source have done the same thing, so why is this one tool usage so wildly different?

Historically open source and closed source have done the same thing, so why is this one tool usage so wildly different?

Because, as noted by another replier, open source wants working code and closed source just wants code that runs.

When did you last decide to buy a car that barely drives?

And another thing, there are some tech companies that operate very short-term, like typical social media start-ups of which about 95% go bust within two years. But a lot of computing is very long term with code bases that are developed over many years.

The world only needs so many shopping list apps - and there exist enough of them that writing one is not profitable.

And another thing, there are some tech companies that operate very short-term, like typical social media start-ups of which about 95% go bust within two years.

This is a very generous sentence you have made, haha. My observation is that the vast majority of tech companies seem to operate unprofitably (the programming division is pure cost, no measurable financial benefit) and with churning, bug-riddled code that never really works correctly.

Netflix was briefly hugely newsworthy in the technology circles because they… Regularly did disaster recovery tests.

Edit: Netflix made news headlines because someone decided that Kevin in IT having a bad day shouldn’t stop every customer from streaming. This made the news.

Our technology “leadership” are, on average, so incredibly bad at computer stuff.

There is commercial open source stuff too.